Never Miss a Beat: Better CloudWatch Alarms on Metrics with Missing Data

“Everything fails all the time,” said Werner Vogels. Today’s distributed systems comprise a lot of moving parts. Each component in your system can suddenly break for a myriad of reasons. When something inevitably happens, you should be the first to know. Not your customers.

This is where alarms come in. Alarms notify you when something of importance happens. Alarms should always be actionable, and you must be able to trust them. Too many false alarms or alarms that require no action will result in alarm fatigue, a dangerous state where you start ignoring alarms.

As a heavy user of CloudWatch Alarms, I’ve found some problems with alarms on metrics that include “missing data.” Sometimes, these alarms can fail to fire or stay in the ALARM state indefinitely. You might have seen this yourself and wondered how to fix it. Let’s look at some examples and a simple solution to the problem.

Example error alarms

We will look at an error alarm. The alarm should fire whenever a Lambda invocation results in an error. In the example setup, I have a Lambda function that always results in an error. EventBridge triggers the function every 15 minutes. This basic setup thus simulates an error happening every 15 minutes, and we expect the alarm to fire off every 15 minutes.

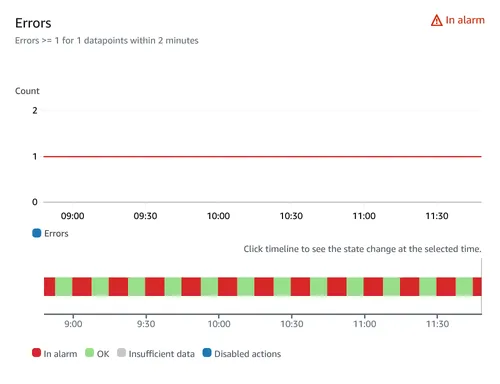
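To reproduce this setup, the function only needs to raise an exception on every invocation. Lambda counts unhandled exceptions in the function's Errors metric. Below is a minimal sketch assuming the Python runtime. The exception message is hypothetical, and `rate(15 minutes)` is the EventBridge rate expression for the 15-minute schedule.

```python
# Schedule for the EventBridge rule that triggers the function
# (EventBridge rate-expression syntax).
SCHEDULE_EXPRESSION = "rate(15 minutes)"


def handler(event, context):
    """Lambda handler that fails on every invocation.

    The unhandled exception makes each invocation count toward the
    function's Errors metric in CloudWatch.
    """
    # Hypothetical message; any unhandled exception has the same effect.
    raise RuntimeError("Simulated failure for alarm testing")
```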

I will showcase two alarms with different periods:

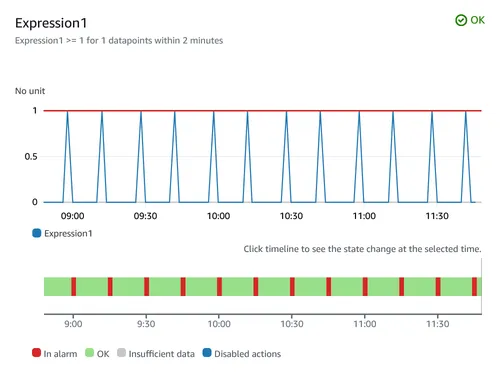

- Errors >= 1 for 1 datapoints within 2 minutes

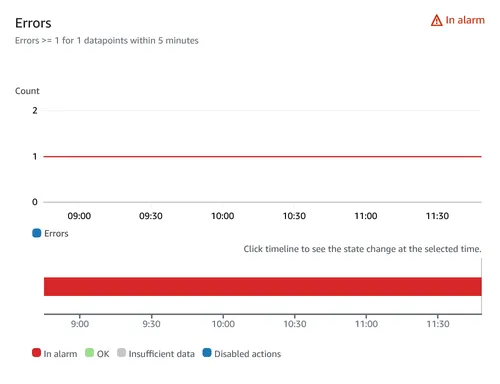

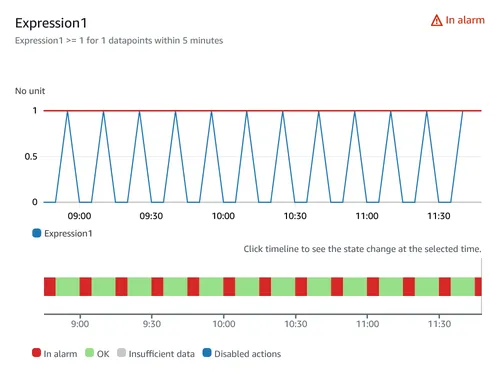

- Errors >= 1 for 1 datapoints within 5 minutes

Both are configured to treat missing data as good (not breaching threshold).
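For reference, the two alarms could be expressed as keyword arguments for boto3's `put_metric_alarm`. This is a sketch. The function name is hypothetical, and the `Sum` statistic is an assumption, since only the threshold and period are shown above. The dicts are built without calling AWS, so you can inspect them or pass them to a client later.

```python
def error_alarm(function_name: str, period_seconds: int) -> dict:
    """Keyword arguments for cloudwatch.put_metric_alarm describing
    'Errors >= 1 for 1 datapoints within <period>' on a Lambda function."""
    return {
        "AlarmName": f"{function_name}-errors-{period_seconds // 60}m",
        "Namespace": "AWS/Lambda",
        "MetricName": "Errors",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",  # assumption: the statistic is not stated above
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "DatapointsToAlarm": 1,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # "Treat missing data as good (not breaching threshold)"
        "TreatMissingData": "notBreaching",
    }


# Hypothetical function name; the two alarms differ only in their period.
two_minute = error_alarm("always-failing-function", 120)
five_minute = error_alarm("always-failing-function", 300)

# With credentials configured, the alarms could be created with:
#   import boto3
#   cloudwatch = boto3.client("cloudwatch")
#   cloudwatch.put_metric_alarm(**two_minute)
#   cloudwatch.put_metric_alarm(**five_minute)
```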

What results do you expect if the function reports a failure every fifteen minutes?

I expect CloudWatch to check the metric every 2 minutes in the first alarm. It should fire if there is an error within the last two minutes. Since it is configured to treat missing data as good, it should treat missing data as if no errors have occurred and display an OK state. Therefore, I expect a two-minute ALARM state window every fifteen minutes.

I expect similar behavior for the second alarm, except that the ALARM state window should be five minutes long every fifteen minutes.

Let’s look at reality:

What is happening here? The alarm with a two-minute window is mostly in the ALARM state, even though an error occurs only every fifteen minutes.

It’s even worse for the second alarm: it never returns to the OK state. The “treat missing data as good” setting doesn’t seem to do what we expect.

CloudWatch’s treatment of missing data

To find out why, we need to head to the documentation. The following quotes, though cryptic, explain it.

Whenever an alarm evaluates whether to change state, CloudWatch attempts to retrieve a higher number of data points than the number specified as Evaluation Periods. The exact number of data points it attempts to retrieve depends on the length of the alarm period and whether it is based on a metric with standard resolution or high resolution. The time frame of the data points that it attempts to retrieve is the evaluation range.

This is because alarms are designed to always go into ALARM state when the oldest available breaching datapoint during the Evaluation Periods number of data points is at least as old as the value of Datapoints to Alarm, and all other more recent data points are breaching or missing.

I find the entire section on how CloudWatch treats missing data confusing. This is my best guess on how it works:

CloudWatch attempts to retrieve data points outside your defined window. You do not have any control over this so-called evaluation range. It evaluates all of the data points in this range. If it finds one breaching data point and all other more recent data points are missing, the alarm goes to the ALARM state.

In the five-minute alarm example above, the evaluation range is wide enough that it always includes the previous error. Since all the more recent data points are missing, CloudWatch ignores the “treat missing data as good” setting and keeps the alarm in the ALARM state.
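To make this reading concrete, here is a toy model of it for an alarm with one evaluation period and one datapoint to alarm. It simplifies the interpretation above and is not CloudWatch's real evaluation logic. The function name, the three-valued datapoint encoding, and the restriction to the `breaching` and `notBreaching` settings are all assumptions made for illustration.

```python
from typing import Optional, Sequence

# One entry per period in the evaluation range, oldest first:
# True = breaching, False = not breaching, None = missing.
Datapoint = Optional[bool]


def simplified_alarm_state(points: Sequence[Datapoint], treat_missing_data: str) -> str:
    """Toy model for an alarm with 1 evaluation period and 1 datapoint to alarm.

    Only 'breaching' and 'notBreaching' are modeled for treat_missing_data.
    This illustrates the interpretation above, not CloudWatch's algorithm.
    """
    actual = [p for p in points if p is not None]
    if not actual:
        # Only when the whole evaluation range is empty does the
        # missing-data setting decide the state.
        return "ALARM" if treat_missing_data == "breaching" else "OK"
    # Otherwise the most recent real datapoint decides, and the missing
    # datapoints after it are ignored.
    return "ALARM" if actual[-1] else "OK"


# One old error followed by missing data: "treat missing data as good"
# does not help, because the old error is still in the evaluation range.
print(simplified_alarm_state([True, None, None], "notBreaching"))  # ALARM
```

The same model predicts the heartbeat case below. A single non-breaching datapoint left in the evaluation range keeps the alarm in OK, however missing data is treated.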

Example heartbeat alarm

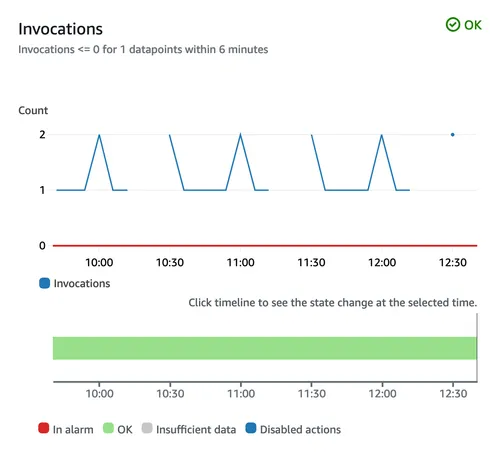

Let’s look at the effect this has on another type of alarm. Imagine a process that sends a heartbeat to your system every five minutes. If you do not receive the heartbeat, something might be wrong with the process, and you may want to raise an alarm.

I’ve simulated this with a Lambda function that I invoke every five minutes, except at XX:20 and XX:25 each hour. To be notified of missing heartbeats, I have the following alarm:

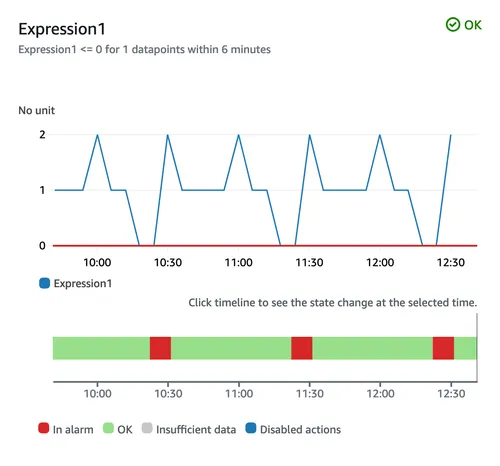

- Invocations <= 0 for 1 datapoints within 6 minutes

The period is 6 minutes to ensure that no matter when CloudWatch checks the window, it should find at least one heartbeat if everything is OK. The alarm is configured to treat missing data as bad. So, if there are no heartbeats in the last six minutes, CloudWatch should treat that as breaching and sound the alarm.
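The heartbeat schedule and alarm could be written down as shown below. This is a sketch. The function name is hypothetical, and the `Sum` statistic is an assumption. The cron expression is one way to say "every five minutes except XX:20 and XX:25" in EventBridge's six-field cron syntax.

```python
# EventBridge cron: minutes hours day-of-month month day-of-week year.
# Fires every five minutes except at XX:20 and XX:25.
HEARTBEAT_SCHEDULE = "cron(0,5,10,15,30,35,40,45,50,55 * * * ? *)"


def heartbeat_alarm(function_name: str) -> dict:
    """put_metric_alarm arguments for 'Invocations <= 0 for 1 datapoints
    within 6 minutes', treating missing data as bad."""
    return {
        "AlarmName": f"{function_name}-missing-heartbeat",
        "Namespace": "AWS/Lambda",
        "MetricName": "Invocations",
        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
        "Statistic": "Sum",  # assumption
        "Period": 360,  # six minutes, in seconds
        "EvaluationPeriods": 1,
        "DatapointsToAlarm": 1,
        "Threshold": 0.0,
        "ComparisonOperator": "LessThanOrEqualToThreshold",
        # "Treat missing data as bad (breaching threshold)"
        "TreatMissingData": "breaching",
    }


alarm = heartbeat_alarm("heartbeat-function")  # hypothetical function name
```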

Does it?

No, it doesn’t. This is the same problem as before: the data points CloudWatch finds inside the evaluation range (but outside the period) override the missing data points.

With this configuration, we would never get notified about the missing heartbeats. How do we fix this?

Math to the rescue

CloudWatch metric math expressions let you create new time series based on other metrics. They support basic arithmetic, comparison and logical operators, and built-in functions.

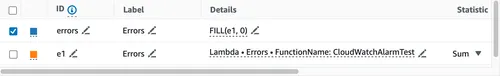

One built-in function is FILL, which fills in the missing values of a time series with a value you specify. Because the resulting time series has no missing data points, CloudWatch’s special handling of missing data never comes into play.
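Conceptually, FILL turns a series with gaps into a series without gaps. The Python function below illustrates that idea on a plain list, with `None` standing in for a missing datapoint. It is not how CloudWatch implements the expression, and the function name is hypothetical.

```python
from typing import List, Optional, Sequence


def fill(series: Sequence[Optional[float]], value: float) -> List[float]:
    """Illustration of FILL(m1, value): every missing datapoint (None)
    is replaced by `value`; existing datapoints are left unchanged."""
    return [value if point is None else point for point in series]


# Errors per period: one error, then periods with no data at all.
errors = [1.0, None, None, None]
print(fill(errors, 0.0))  # [1.0, 0.0, 0.0, 0.0]
```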

You can create a metric with the FILL function on the metrics page by choosing Add math > Common > Fill. In the new expression, you reference the metric whose missing values you want to fill, along with the value to fill them with.

For the Lambda errors metric, where the Errors metric has the ID m1, the expression could be FILL(m1, 0).
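Applied to the error alarms above, an alarm on the filled metric could be described with the `Metrics` parameter of boto3's `put_metric_alarm`, which accepts metric math. This is a sketch with a hypothetical function name and an assumed `Sum` statistic. `m1` is the raw Errors metric, and `e1` is the expression the alarm evaluates.

```python
def filled_error_alarm(function_name: str, period_seconds: int) -> dict:
    """put_metric_alarm arguments for an error alarm that evaluates
    FILL(m1, 0) instead of the raw Errors metric."""
    return {
        "AlarmName": f"{function_name}-errors-filled-{period_seconds // 60}m",
        "Metrics": [
            {
                # Raw metric, used only as input to the expression.
                "Id": "m1",
                "MetricStat": {
                    "Metric": {
                        "Namespace": "AWS/Lambda",
                        "MetricName": "Errors",
                        "Dimensions": [{"Name": "FunctionName", "Value": function_name}],
                    },
                    "Period": period_seconds,
                    "Stat": "Sum",  # assumption
                },
                "ReturnData": False,
            },
            {
                # The series the alarm evaluates: missing datapoints become 0 ("no errors").
                "Id": "e1",
                "Expression": "FILL(m1, 0)",
                "Label": "Errors (missing filled with 0)",
                "ReturnData": True,
            },
        ],
        "EvaluationPeriods": 1,
        "DatapointsToAlarm": 1,
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        "TreatMissingData": "notBreaching",
    }
```

When `Metrics` is used, the top-level `MetricName`, `Namespace`, `Statistic`, and `Period` parameters are left out, and the period moves into `MetricStat`.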

Improved error alarms

Let’s see how using the fill function can improve our error alarms. The alarms below use the same configuration as before, but the metric they query uses the fill function to fill missing data points with a 0 to indicate no errors.

Here, we can see a big difference in how snappy the alarms are. The time spent in the ALARM state closely matches the period we set for the alarm. Previously, the alarm that had a five-minute period never left the ALARM state. Now, it correctly toggles back and forth.

Improved heartbeat alarm

The example heartbeat alarm never fired because of how missing data is treated. Let’s see what happens when the alarm instead uses a metric with the fill function, again filling empty values with 0 to indicate no invocations.

The alarm now correctly fires every hour when the simulated heartbeat stops.
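A rough simulation shows why the filled metric now breaches. It evaluates a trailing six-minute window once per minute, which simplifies how CloudWatch actually aligns periods. The helper names are hypothetical.

```python
from typing import List, Optional


def heartbeat_counts(minutes: int = 60) -> List[Optional[int]]:
    """Per-minute Invocations for the simulated heartbeat: one invocation every
    five minutes except at XX:20 and XX:25; None means no datapoint."""
    return [1 if m % 5 == 0 and m % 60 not in (20, 25) else None for m in range(minutes)]


def breaching_window_ends(counts: List[Optional[int]], period: int = 6) -> List[int]:
    """Minutes at which a trailing window of `period` minutes, after filling
    missing datapoints with 0, satisfies Invocations <= 0."""
    filled = [0 if c is None else c for c in counts]  # like FILL(m1, 0)
    return [
        end
        for end in range(period - 1, len(filled))
        if sum(filled[end - period + 1 : end + 1]) <= 0
    ]


print(breaching_window_ends(heartbeat_counts()))  # [21, 22, 23, 24, 25, 26, 27, 28, 29]
```

Only windows that fall entirely inside the gap from XX:16 to XX:29 sum to zero. With this windowing, the alarm breaches once per hour, during the missing heartbeats.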

Conclusion

Getting your alarms right can be tricky. This is especially true when the metrics you track might include missing data. Using math expressions in your metrics can save you headaches when you wonder why your alarms are never firing or are constantly stuck in the ALARM state.

Who said you’d never use math as an adult?